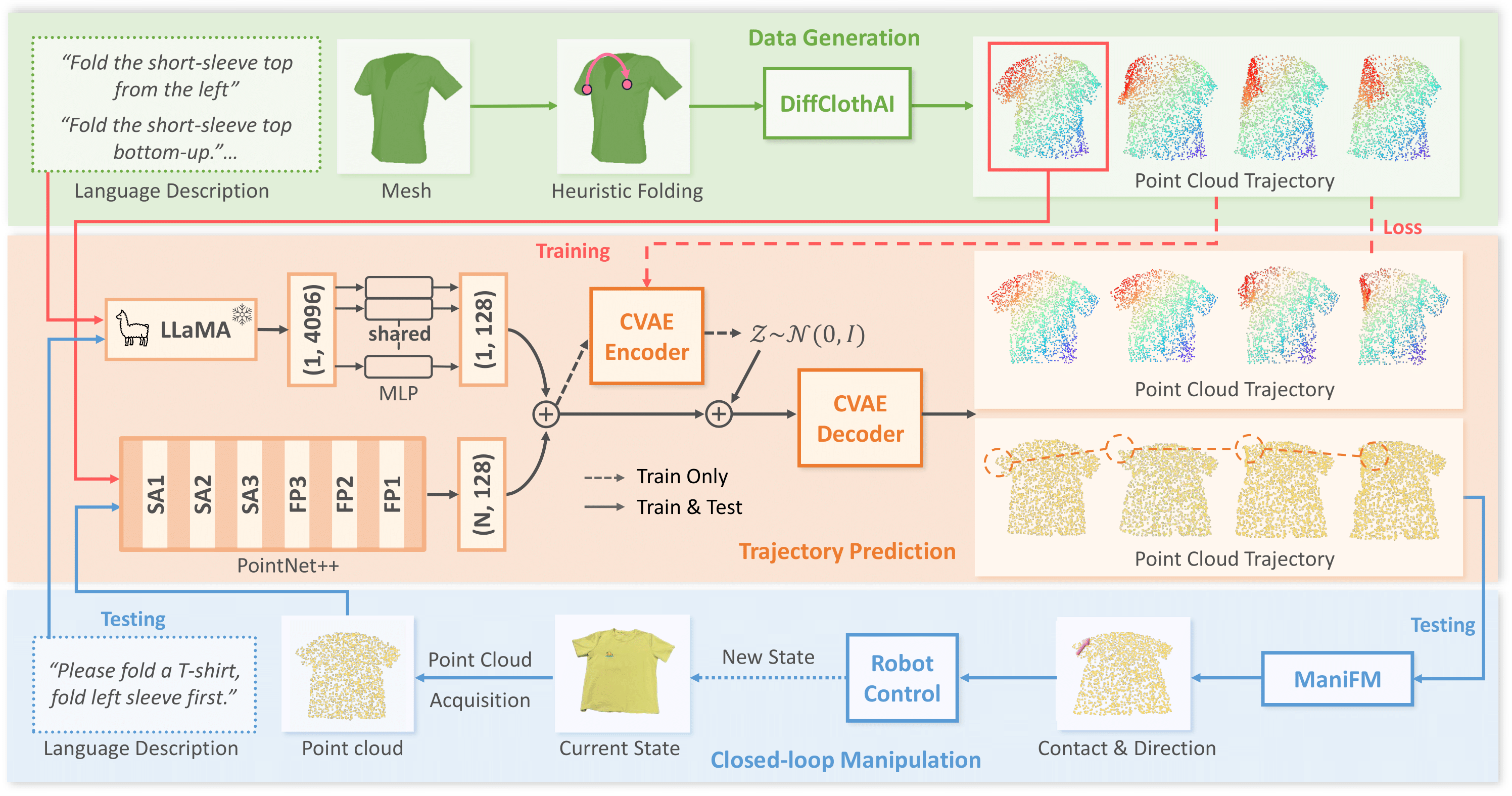

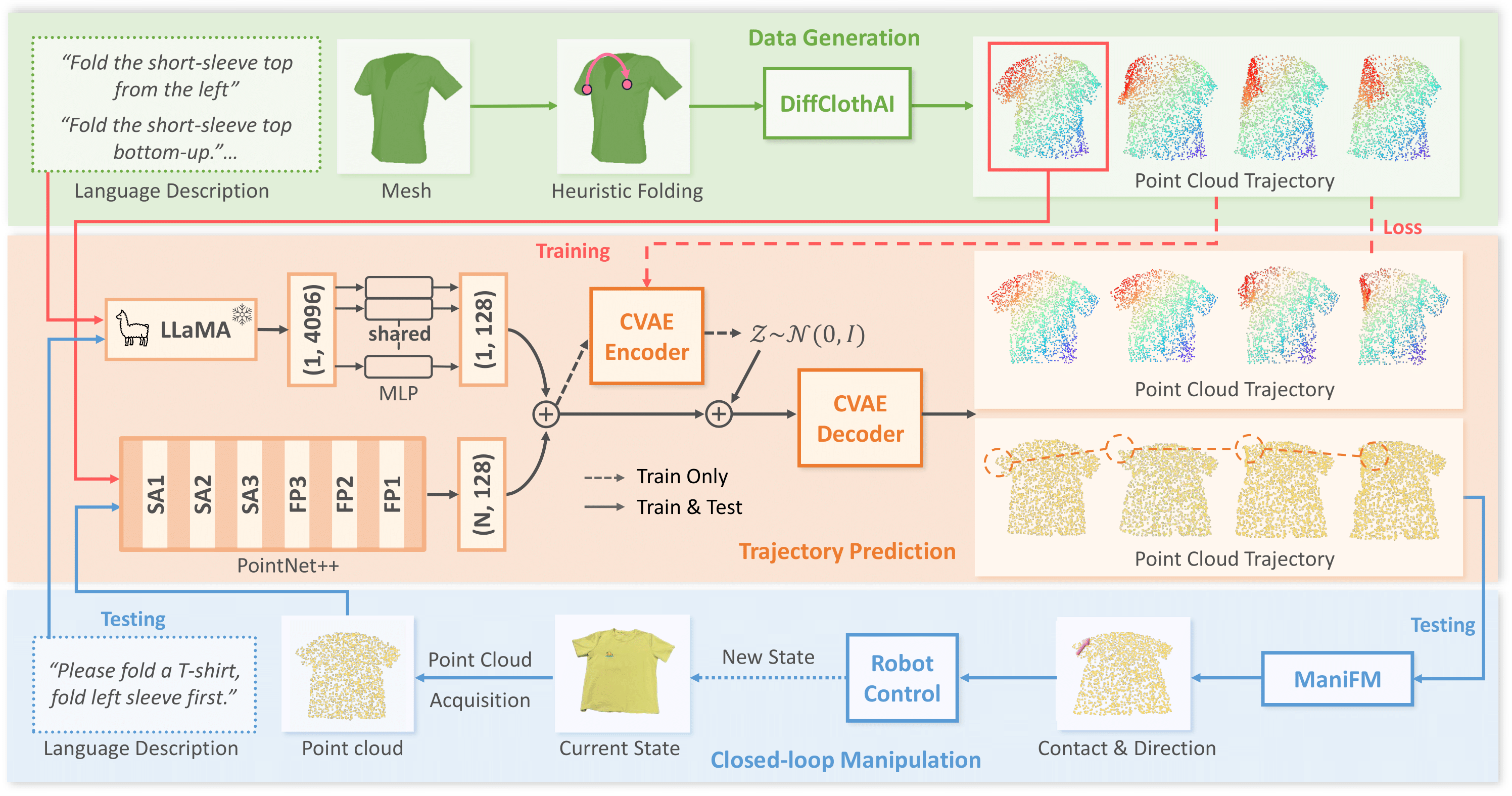

Pipeline Overview

1 National University of Singapore; 2 NUS Guangzhou Research Translation and Innovation Institute; 3 Nanjing University; 4 Peking University; 5 Shanghai Jiao Tong University

* denotes equal contribution; † denotes corresponding author

Garment folding is a common yet challenging task in robotic manipulation. Because garments are deformable, they have a vast state space and complex dynamics, which makes precise, fine-grained manipulation difficult. Previous approaches often rely on predefined key points or demonstrations, which limits their generalizability across diverse garment categories. This paper presents MetaFold, a framework that disentangles task planning from action prediction and learns each independently to improve generalization. It uses language-guided point cloud trajectory generation for task planning and a low-level foundation model for action prediction. This structure supports multi-category learning, allowing the model to adapt flexibly to different user instructions and folding tasks. Experimental results demonstrate the superiority of the proposed framework.
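The separation between task planning and action prediction can be illustrated with a small sketch. This is not the MetaFold implementation: `toy_planner`, `toy_action_predictor`, `PickPlace`, and `fold` are hypothetical names, the planner is a hand-written mirror fold rather than a learned language-guided generator, and the predictor is a simple heuristic rather than a foundation model. The sketch only shows how a planner that outputs point cloud frames can be paired with an independent module that turns consecutive frames into actions.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]
PointCloud = List[Point]


@dataclass
class PickPlace:
    """Hypothetical action format: move one point from `pick` to `place`."""
    pick: Point
    place: Point


def toy_planner(cloud: PointCloud, instruction: str, steps: int = 4) -> List[PointCloud]:
    """Toy stand-in for language-guided trajectory generation.

    If the instruction mentions "fold", points with x > 0 are moved step by
    step onto their mirror position -x. Otherwise no frames are produced.
    """
    if "fold" not in instruction.lower():
        return []
    frames = []
    for k in range(1, steps + 1):
        t = k / steps  # interpolation parameter in (0, 1]
        frames.append([
            (x - 2 * x * t, y, z) if x > 0 else (x, y, z)
            for (x, y, z) in cloud
        ])
    return frames


def toy_action_predictor(current: PointCloud, target: PointCloud) -> PickPlace:
    """Toy stand-in for the low-level model: grasp the point that must move
    the farthest and place it at its target position."""
    def dist2(a: Point, b: Point) -> float:
        return sum((p - q) ** 2 for p, q in zip(a, b))

    i = max(range(len(current)), key=lambda j: dist2(current[j], target[j]))
    return PickPlace(pick=current[i], place=target[i])


def fold(
    cloud: PointCloud,
    instruction: str,
    planner: Callable[[PointCloud, str], List[PointCloud]],
    predictor: Callable[[PointCloud, PointCloud], PickPlace],
) -> List[PickPlace]:
    """Run the planner once, then predict one action per planned frame."""
    actions = []
    current = cloud
    for frame in planner(cloud, instruction):
        actions.append(predictor(current, frame))
        # Assumption: the action is executed perfectly. A real system would
        # re-observe the garment here instead of trusting the plan.
        current = frame
    return actions


cloud = [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
actions = fold(cloud, "Fold the sleeve", toy_planner, toy_action_predictor)
```

Because `fold` only depends on the two callables' signatures, either stage can be swapped out without touching the other, which is the property the decoupled design relies on.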

We present visualizations of several garment folding trajectories from the MetaFold Dataset. These garments include NoSleeve, ShortSleeve, LongSleeve, and Pants. Each row contains three different garments; dragging the slider beneath a garment reveals its folding process. Selecting the action on the right side of each row visualizes the subsequent folding stages. NoSleeve has only one folding stage, ShortSleeve and LongSleeve each have three stages, and Pants has two stages.
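The stage counts described above can be captured in a small lookup table. The following is a minimal sketch of one way to index trajectories by category and stage; `STAGES_PER_CATEGORY`, `FoldingTrajectory`, and the zero-based stage numbering are illustrative assumptions, not the actual layout of the MetaFold Dataset.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float, float]

# Stage counts as stated in the text; the dictionary name is hypothetical.
STAGES_PER_CATEGORY = {
    "NoSleeve": 1,
    "ShortSleeve": 3,
    "LongSleeve": 3,
    "Pants": 2,
}


@dataclass
class FoldingTrajectory:
    """Hypothetical record for one folding stage of one garment."""
    category: str
    stage: int  # zero-based stage index (an assumption of this sketch)
    frames: List[List[Point]] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.category not in STAGES_PER_CATEGORY:
            raise ValueError(f"unknown category: {self.category}")
        if not 0 <= self.stage < STAGES_PER_CATEGORY[self.category]:
            raise ValueError(
                f"{self.category} has {STAGES_PER_CATEGORY[self.category]} stage(s); "
                f"got stage index {self.stage}"
            )


total_stages = sum(STAGES_PER_CATEGORY.values())
```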

Garments shown: No-Sleeve 1 to 3, Short-Sleeve 1 to 3, Long-Sleeve 1 to 3, Pants 1 to 3.

@misc{chen2025metafoldlanguageguidedmulticategorygarment,

title={MetaFold: Language-Guided Multi-Category Garment Folding Framework via Trajectory Generation and Foundation Model},

author={Haonan Chen and Junxiao Li and Ruihai Wu and Yiwei Liu and Yiwen Hou and Zhixuan Xu and Jingxiang Guo and Chongkai Gao and Zhenyu Wei and Shensi Xu and Jiaqi Huang and Lin Shao},

year={2025},

eprint={2503.08372},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2503.08372},

}